Introduction

Vision-language models (VLMs) have rapidly advanced the field of multimodal AI, enabling systems that can interpret images, answer visual questions, and generate descriptive captions. Yet despite these impressive capabilities, current VLMs exhibit persistent limitations that hinder their reliability in complex visual reasoning tasks. Many struggle with spatial reasoning, failing to accurately determine relative positions, sizes, and relationships between objects in a scene. They are prone to hallucination, confidently describing objects or attributes that don't exist in the image, particularly when queries involve fine-grained details or ambiguous references. Furthermore, standard VLMs often operate as "black boxes," producing answers without revealing the underlying logic, making it difficult to diagnose errors or build trust in high-stakes applications. These models perform well on straightforward recognition tasks but falter when a query requires multi-step inference: identifying relevant regions, filtering candidates based on attributes, and synthesizing information across the image.

Addressing these challenges requires a paradigm shift in how we prompt and interact with VLMs, one that encourages explicit, step-by-step reasoning rather than single-shot predictions. This is where Chain-of-Thought (CoT) prompting emerges as a powerful technique, enabling VLMs to decompose complex visual tasks into interpretable reasoning traces that improve both accuracy and transparency.

What is CoT Prompting in VLMs?

Chain-of-Thought (CoT) prompting guides a model to think out loud by generating intermediate logic or reasoning steps before it arrives at a final answer. This technique first originated in text-only LLMs, where it proved remarkably effective at improving performance on complex reasoning tasks, such as mathematical proofs and action planning. The core idea is simple and mimics how students learn in school: instead of asking a model to jump straight to an answer, you prompt it to show its work through a series of steps.

When applied to VLMs, the effect of CoT prompting extends into the visual dimension. Apart from text, the model can describe what it observes in an image, such as important visual cues, reasons through them step-by-step, and only then produces its final response. A simple example would be a query like “Which object does the phrase ‘small green ball’ refer to in this image?” Without CoT, a VLM might guess based on superficial pattern matching, and might make a mistake if there is more than one green ball in the image. With CoT, the model can enumerate the green objects it sees, compare their sizes, and logically conclude which one qualifies as “small”. This mirrors how a human would approach the problem, systematically narrowing down candidates and eliminating obvious wrong answers, rather than just taking a shot in the dark.

CoT provides numerous benefits to model training, such as making model behaviour interpretable, since you can trace exactly why the model chose a particular answer, and identify logical flaws, fallacies, and hallucinations much more easily. It also tends to improve model accuracy, as the explicit reasoning process forces the model to attend to relevant details rather than relying on shortcuts. Additionally, CoT allows the model to break a complex problem down into more manageable subproblems and tackle them step-by-step, reducing the risk of total hallucination.

CoT prompting in VLMs has recently gained traction in both research and open-source communities. Several VLMs now support or actively incorporate step-wise reasoning. In the next section, we’ll dive into how CoT can be applied in two practical tasks: phrase grounding and visual question answering.

CoT Strategies for Phrase Grounding

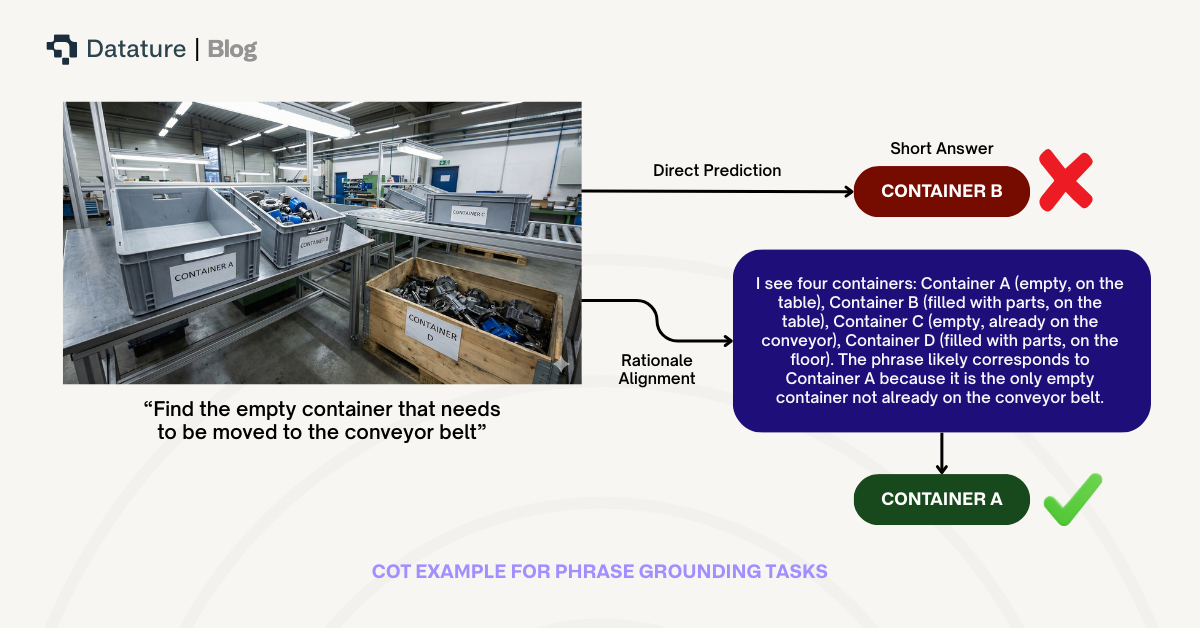

Phrase grounding (also known as referring expression comprehension) is the task of locating a region in an image described by a natural language phrase. For example, a system might be asked: "Find the empty container that needs to be moved to the conveyor belt." Solving this requires understanding the phrase ("empty container" that "needs to be moved") and then identifying the corresponding visual evidence in the image (the correct object and region).

CoT prompting can significantly enhance phrase grounding in VLMs by having the model break the problem into sub-tasks:

- Understand the phrase: The model first analyzes what is needed from the query phrase: e.g. identify a container (object type) that is empty (state attribute) and needs to be moved to the conveyor (task context). This parsing might be implicit but is part of the reasoning chain.

- Scan the image for candidates: The model then analyzes the image and tries to match what it sees. The exact approach might vary, but would be something similar to internally enumerating all containers visible in the scene, filtering out any that appear full, then checking which of the remaining containers are positioned away from the conveyor belt. For instance, the reasoning output could be: "I see four containers: Container A (empty, on the table), Container B (filled with parts, on the table), Container C (empty, already on the conveyor), Container D (filled with parts, on the floor). The phrase likely corresponds to Container A because it is the only empty container not already on the conveyor belt."

- Locate the chosen candidate: Once the model has selected its candidate, it outputs the grounded result, such as a bounding box around Container A. With CoT, the model can also explain why that region was specifically chosen ("the bounding box is [x1, y1, x2, y2] because that encompasses the empty container on the table that still needs to be moved to the conveyor").

In practice, modern CoT-enabled VLMs handle much of this reasoning automatically. For example, NVIDIA’s Cosmos Reason1-7B has been trained on physical common sense and embodied reasoning datasets, enabling it to understand space, time, and fundamental physics while generating step-by-step reasoning about real-world scenarios. If we prompt Cosmos Reason1 with a video or image and a question about physical interactions, we can encourage explicit chain-of-thought by including the format instruction in the system prompt: "Answer the question in the following format: <think>\nyour reasoning\n</think>\n\n<answer>\nyour answer\n</answer>". In response, Cosmos Reason1 produces a detailed reasoning trace explaining its understanding of the physical scene, followed by a grounded decision or answer.

To illustrate, given a video of a robot arm approaching objects on a table and prompted with "What should the robot do next to pick up the red cup?", the model's output might be:

<think>

The scene shows a robotic arm positioned above a table with several objects.

I can see a red cup near the center, a blue bowl to the left, and some papers on the right.

The gripper is currently open and hovering approximately 20cm above the table surface.

To pick up the red cup, the arm needs to:

1) lower toward the cup while maintaining alignment,

2) position the gripper around the cup's body,

3) close the gripper with appropriate force for a cylindrical object.

The cup appears empty based on how light interacts with it, so minimal grip force is needed.

</think>

<answer>

Move the arm down toward the red cup, align the gripper around the cup body, then close the gripper gently to grasp it.

</answer>In this output, Cosmos Reason1 not only provided the action recommendation but also reasoned through the spatial relationships, object properties, and physical constraints. Essentially, the model analyzed: "What objects are present and where? What state is the robot in? What physical steps are needed? What properties of the target object matter?" All these reasoning steps happen within the model's chain-of-thought, triggered by the reasoning prompt format. The end result is highly interpretable: a developer can see exactly how the model understood the scene and arrived at its decision.

Real-world embodied reasoning systems are evaluated on benchmarks like RoboVQA, BridgeDataV2, HoloAssist (for ego-centric human demonstration), and autonomous vehicle driving scenarios. Cosmos Reason1-7B has achieved strong performance on these benchmarks. For instance, it reaches approximately 87% accuracy on robotic visual question-answering tasks, roughly doubling the scores of its Qwen2.5-VL 7B baseline on physical reasoning benchmarks. Such performance is attributable to its training on physical common sense data combined with reinforcement learning for embodied reasoning.

A query like "Is it safe for the forklift to proceed?" might require understanding spatial clearances, predicting object trajectories, and assessing collision risks. A chain-of-thought approach ensures the model explicitly reasons through each safety consideration. In practical applications, CoT reasoning for physical AI means better safety and explainability. Imagine an autonomous vehicle scenario where the system needs to decide whether to proceed through an intersection. A CoT-capable VLM like Cosmos Reason1 can internally think: "Pedestrians: none visible in crosswalk. Traffic light: green. Oncoming vehicles: one car approaching but sufficient gap. Road condition: dry. Decision: safe to proceed." It then outputs the decision and reasoning as justification. This level of transparency is what CoT reasoning for physical AI can offer.

CoT Strategies for Visual Question Answering

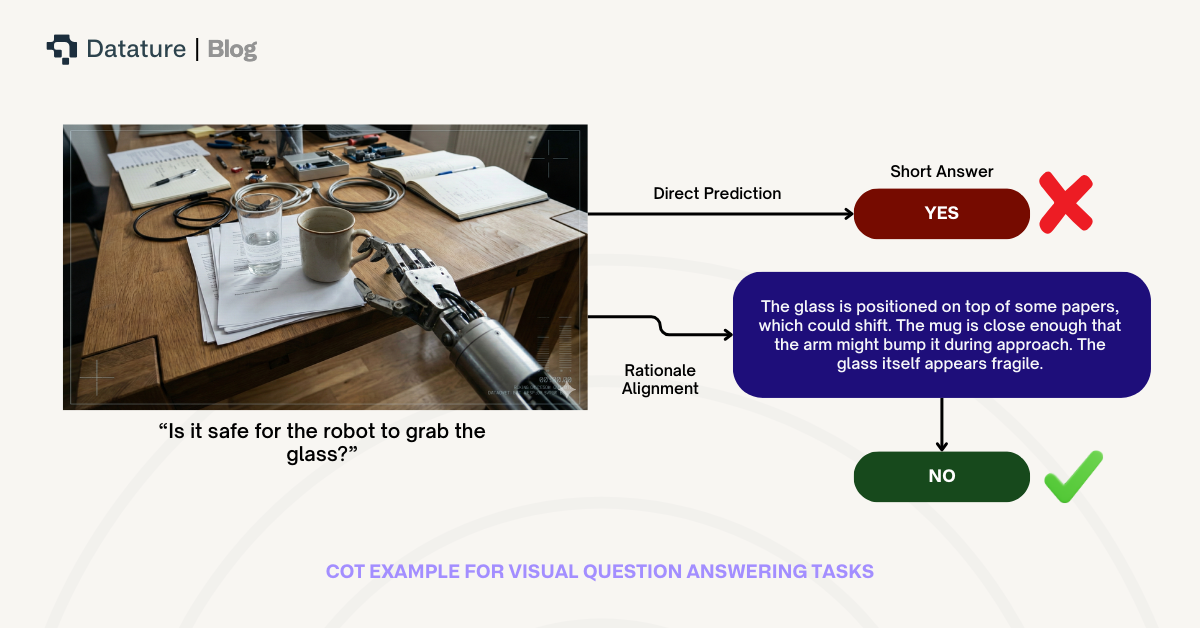

Visual Question Answering (VQA) is the task of answering natural language questions about an image or video. On the surface, it might seem straightforward for a modern VLM. After all, many models can produce a decent answer in one go. However, questions about complex scenes, physical interactions, or multi-step reasoning benefit greatly from chain-of-thought reasoning to ensure the model actually understands what's happening before jumping to an answer.

An example would be a video showing a robot arm reaching toward objects on a cluttered table, with the question "Is it safe for the robot to grab the glass?" A CoT-enabled approach might involve these steps:

- Identify key elements: The model first notes the main objects and their positions. "I see a robotic gripper, a glass near the edge of the table, a stack of papers underneath the glass, and a coffee mug to the right."

- Analyze physical relationships and risks: Next, it reasons about what could go wrong. "The glass is positioned on top of some papers, which could shift. The mug is close enough that the arm might bump it during approach. The glass itself appears fragile."

- Form the answer: Finally, the model synthesizes this into a response. "No, it's not safe. The papers underneath could cause the glass to slide, and the nearby mug creates a collision risk."

In a vanilla VQA model without CoT, there's a risk it might output a simple "Yes" or "No" without actually reasoning through the physical constraints. By thinking through the scene step by step, the model catches potential hazards that a quick assessment might miss. This is especially important for physical AI applications where wrong answers have real-world consequences.

Cosmos Reason1 can similarly be prompted to produce explicit reasoning traces for VQA tasks. By including the format instruction in your system prompt, you get structured output that separates the thinking process from the final answer:

<think>

Looking at the scene, I can see a forklift at the entrance of aisle 3.

There's a person visible about 10 meters down the aisle, walking away from the forklift.

The aisle width appears sufficient for the forklift but not for passing a pedestrian safely.

The person hasn't noticed the forklift yet.

Standard safety protocol would require the pedestrian to clear the aisle or acknowledge the forklift before proceeding.

</think>

<answer>

No, the forklift should not proceed.

There is a pedestrian in the aisle who hasn't acknowledged the forklift.

The operator should sound the horn and wait for the person to move to a safe position.

</answer>CoT in VQA also aids debugging and error analysis. If a model gives a wrong answer, having the reasoning trace lets you pinpoint where things went wrong. For example, if the model said "Yes, the forklift should proceed" when it shouldn't have, the <think> block might reveal "I don't see any obstacles in the path" when there clearly was a pedestrian. This tells you the issue is on the perception side (the model didn't notice the person) rather than the reasoning side (the model saw the person but made a bad judgment call). Without the reasoning trace, you would have no idea which component failed.

Limitations of CoT in Vision-Language Tasks

While chain-of-thought prompting brings interpretability and often better performance, it's not without its challenges. Here are some known limitations and edge cases to keep in mind:

- Coarse or Incorrect Reasoning: VLMs today aren't guaranteed to produce correct chains of thought. A model might generate reasoning that sounds plausible but is actually based on a misperception. For instance, it could say "The phrase likely refers to the man on the left of the image wearing a blue hat", even if that man isn't actually wearing a hat. The model might be hallucinating details due to visual confusion. Research has found that current VLM CoT outputs are often coarse-grained, disorganized, or overly verbose, and not reliably structured. This makes it hard to trust the reasoning without some form of verification. Efforts like Chain-of-Thought Consistency evaluation try to measure how often the reasoning is actually correct and leads to the right answer, since a model might get the right answer for the wrong reasons, or vice versa.

- Evaluation Complexity: Unlike a single answer (like a caption or a selected region) which can be directly compared to ground truth, evaluating a reasoning chain is much more nuanced. There isn't always a "correct reasoning" to compare against. The quality of a CoT is typically assessed through proxies, such as whether the final answer is correct, or through human judgment of the reasoning steps. Some advanced methods (like the Process Reward Models used in InternVL and research from BAAI) actually train a separate model to judge whether each reasoning step makes logical sense. This works, but it adds complexity to the overall system.

- Longer Inference Time: Generating a chain-of-thought means the model outputs more tokens (the thought process plus the answer, instead of just the answer). This naturally takes more time and computational resources during inference. In real-time applications, there's often a trade-off between answer quality and speed. Techniques like step-level beam search and self-consistency (majority voting across multiple CoT samples) have been explored to balance this, but they add their own complexity.

- Potential to Confuse End-Users: If you expose the chain-of-thought to end-users (for example, having the assistant explain its reasoning), there's a risk of overwhelming them with technical details. It's important to tailor how the CoT is presented depending on the audience. For developers, the raw <think>...</think> output is incredibly useful for debugging. But for an end-user interface, you might want to show only a simplified explanation. Also, because the reasoning is generated text, it might include uncertain language or minor errors that could erode user trust if not handled carefully.

- Vision-LLM Alignment Issues: Sometimes the model's language reasoning can overshadow the actual visual evidence. A model might rely too heavily on common sense priors rather than what's actually in the image. For example, given an ambiguous image and a question, the CoT might fill in details from general knowledge ("Usually, people wear hats at the beach to avoid sun...") that aren't actually observable in the scene. This is a form of hallucination specific to multimodal models, where the chain-of-thought drifts away from what is visually verifiable. Ongoing research into Visual-CoT alignment is looking at ways to ground each reasoning step in the image, perhaps by requiring the model to cite specific image regions as evidence for each thought.

.png)

- Training Data and Format: Producing good CoTs often requires training data that includes rationales or explanations. Models like Cosmos Reason1 were trained on millions of such reasoning traces. If you fine-tune a VLM on a new domain without similar data, it might lose some of its CoT ability, or the reasoning might become less relevant to the task. It's also crucial that the format of your prompts (special tokens, tags, etc.) matches what the model was trained on. For example, Cosmos Reason1 knows to produce reasoning inside <think> tags because it was trained with that format. Using CoT prompts in a way the model isn't familiar with could yield incoherent outputs.

Despite these challenges, the overall trend is that carefully leveraging CoT makes VLMs more powerful and reliable. Techniques like Direct Preference Optimization (DPO) and RL fine-tuning (used by teams working on InternVL and similar models) specifically target improving the factuality and coherence of reasoning steps. NVIDIA's Cosmos uses reinforcement learning with straightforward rewards to ensure the <think> block contains correct reasoning. For example, the reward signal checks whether the reasoning led to the right answer and whether it followed the required format. These innovations are gradually making CoT outputs more trustworthy.

Practical Tips and Recommendations

To effectively incorporate CoT into your VLM, especially for Cosmos Reason1-7B, you should:

- Use enough output tokens. The reasoning traces can get long, especially for complex scenes. NVIDIA recommends setting max tokens to 4096 or higher to avoid truncating the chain-of-thought mid-reasoning.

- Add timestamps to video frames. The model recognizes timestamps added at the bottom of each frame, which helps it give precise temporal answers like "At 00:03, the vehicle begins to brake."

- Ask for explanations explicitly. Even with the <think>/<answer> format, you can further improve responses by asking things like "Explain your reasoning about the safety risks" in your prompt.

- Consider the model's strengths. Cosmos Reason1 excels at physical common sense, spatial relationships, and embodied decision-making. It's particularly good at questions like "What should happen next?", "Is this action safe?", and "What went wrong here?"

In the next section, we’ll show you how to finetune, deploy, and run predictions using your model with CoT enabled.

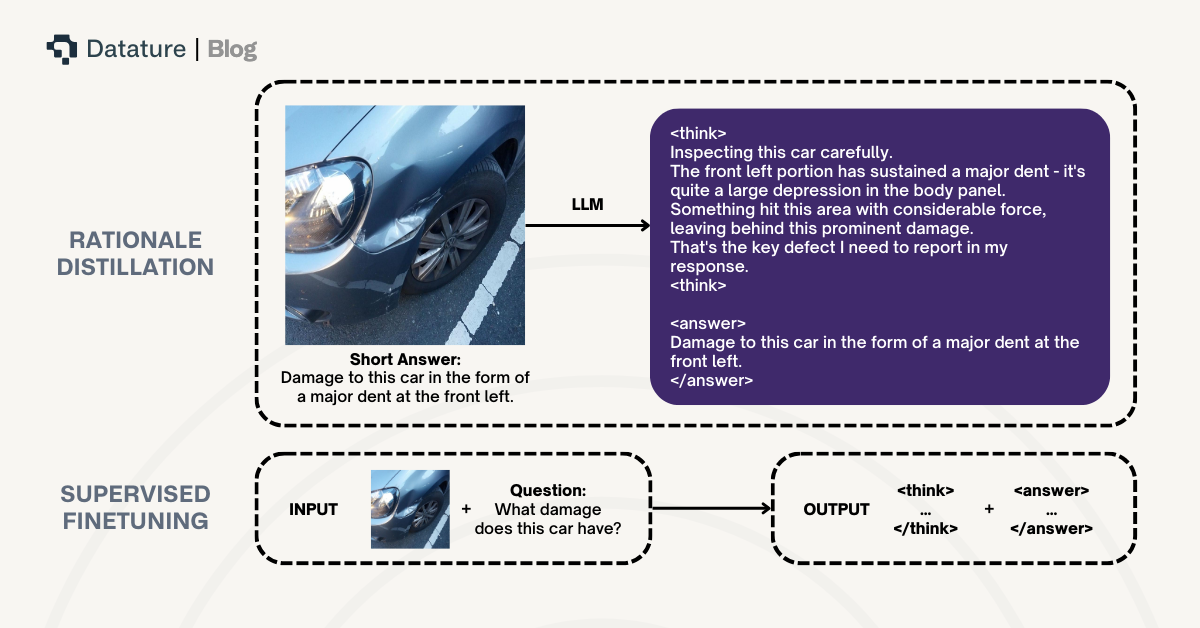

Finetuning Your VLM With CoT

To finetune a VLM with CoT capabilities, you will first need to transform your dataset to include reasoning alongside the ground truth answers. You can do this step of rationale distillation by using off-the-shelf models such as GPT-5o, Claude, or Gemini to generate “think” captions. You can then concatenate both the thinking and the answers, keeping them distinct by using <think></think> and <answer></answer> tags.

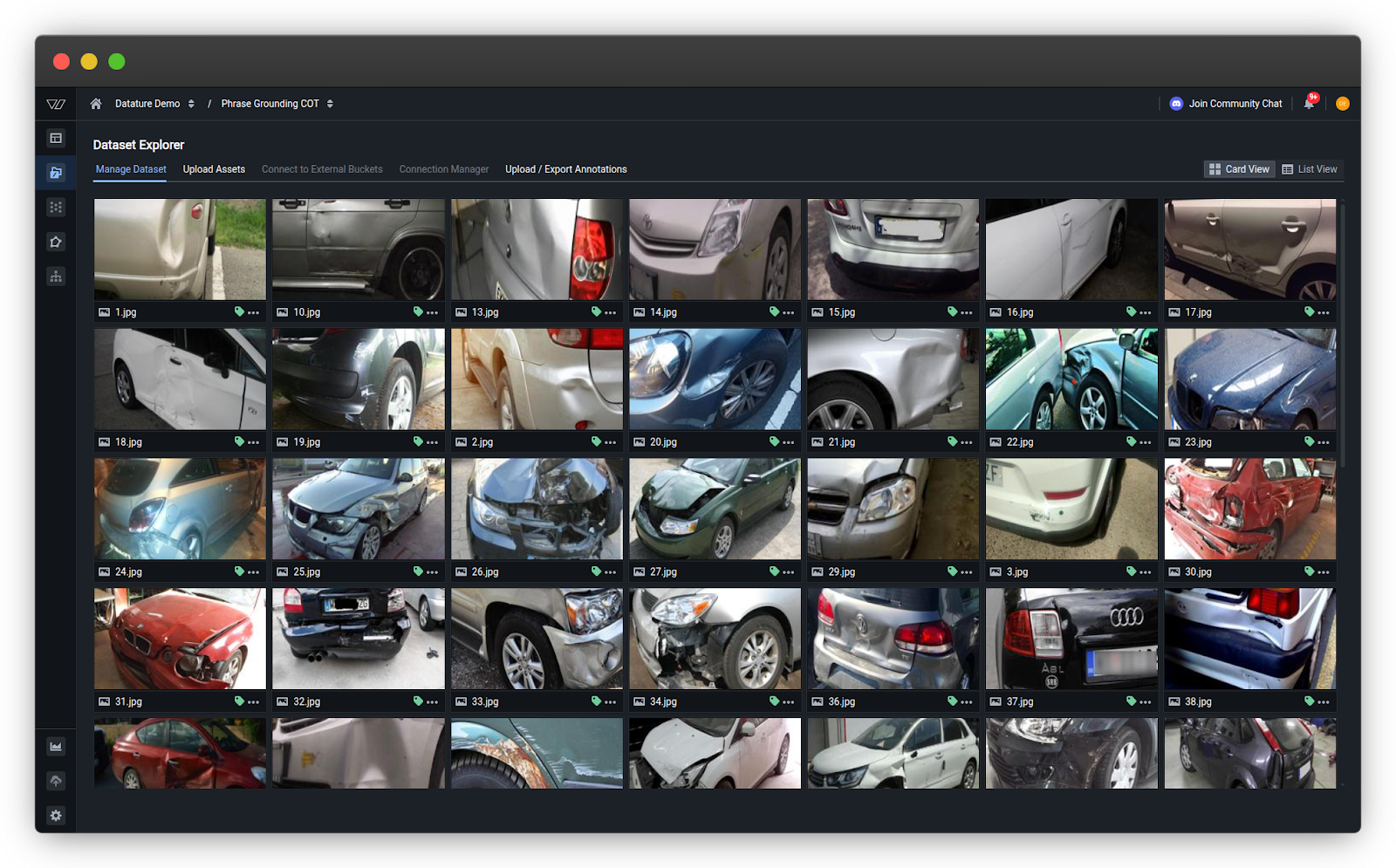

In our example, we will be using a dataset on vehicle damage and training Cosmos Reason1 on a phrase grounding task to correctly identify and locate damages on images of cars. The dataset only contains 70 images to showcase that CoT can be extremely helpful even on small datasets.

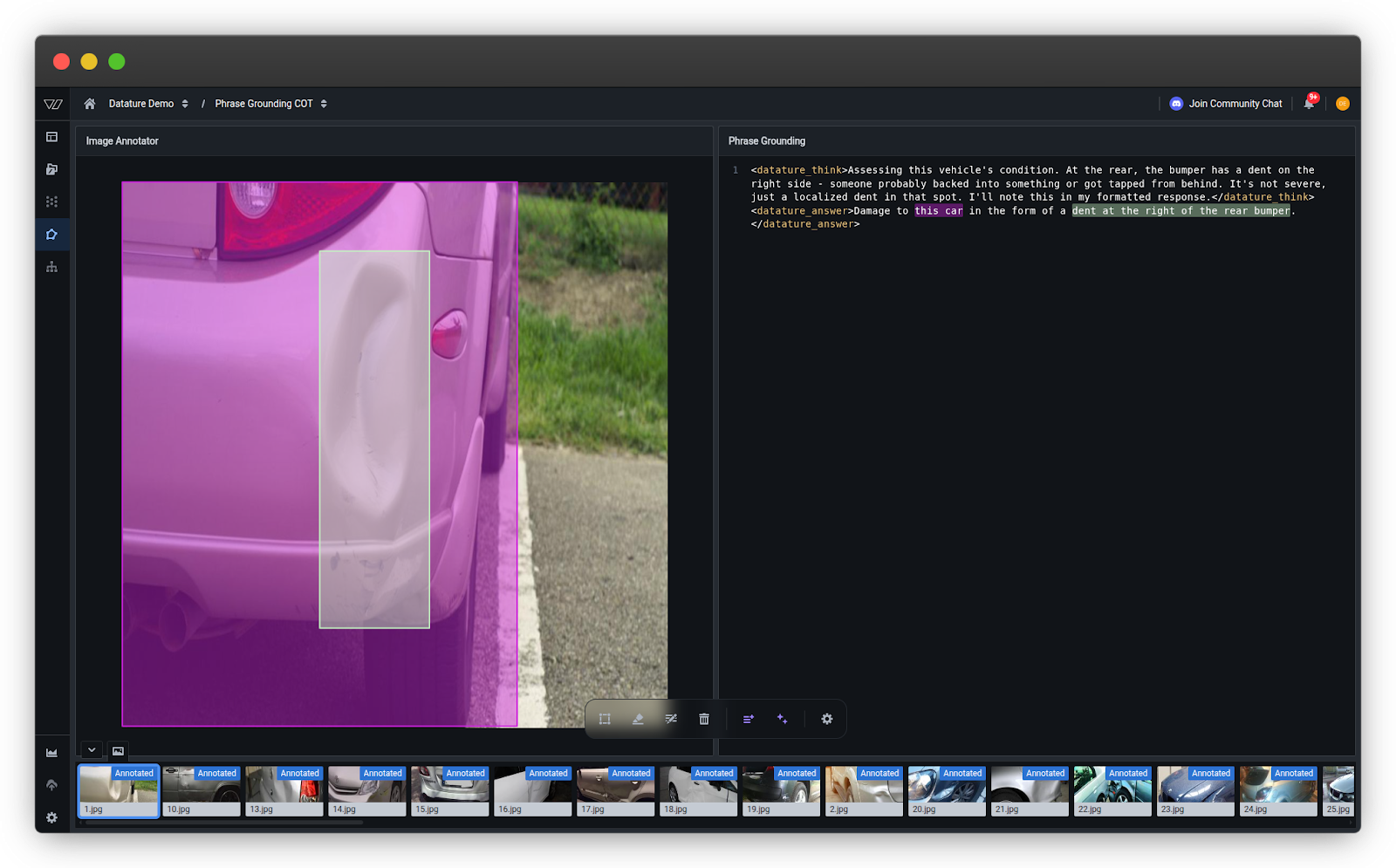

Dataset Annotation

When finetuning on Datature Vi, you will need to use special <datature_think></datature_think> and <datature_answer></datature_answer> tags. This is our common format that will be converted into model-specific think and answer tags during the finetuning process.

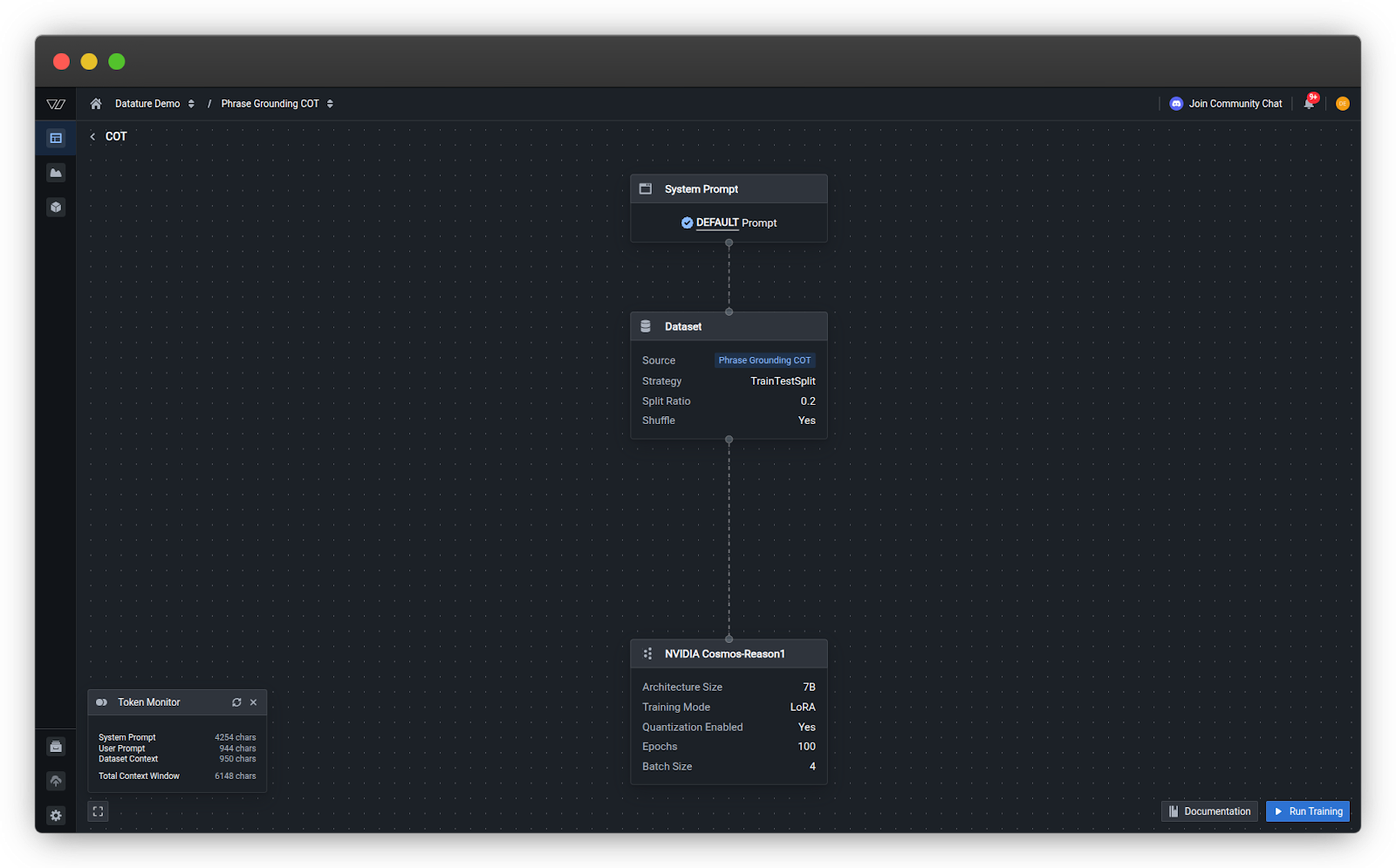

Model Training

Once all the think tags have been prepended to the annotations, you can create a simple workflow to use your new dataset and a model architecture of your choice. There is no need to enable any toggle buttons for CoT, as our training pipeline will automatically infer through the presence of the think tags.

Once your training has started, you will be able to view the evaluation images in real-time as the model goes through evaluation checkpoints. This is a good way to visualize model performance and monitor whether the model is reasoning correctly.

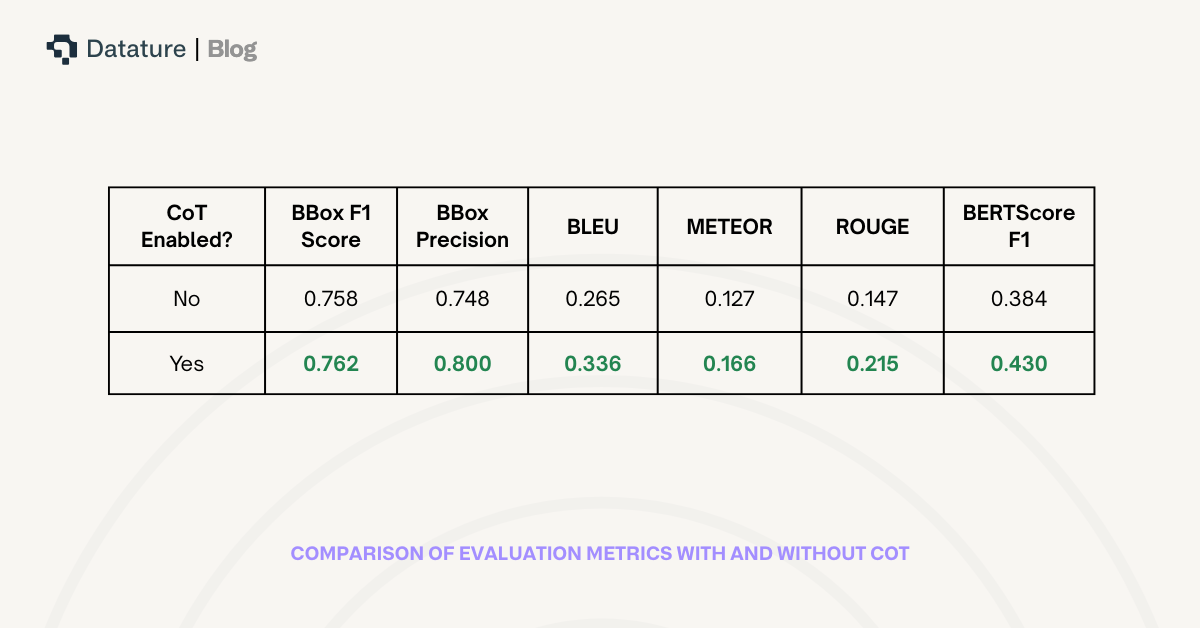

CoT Comparison Study

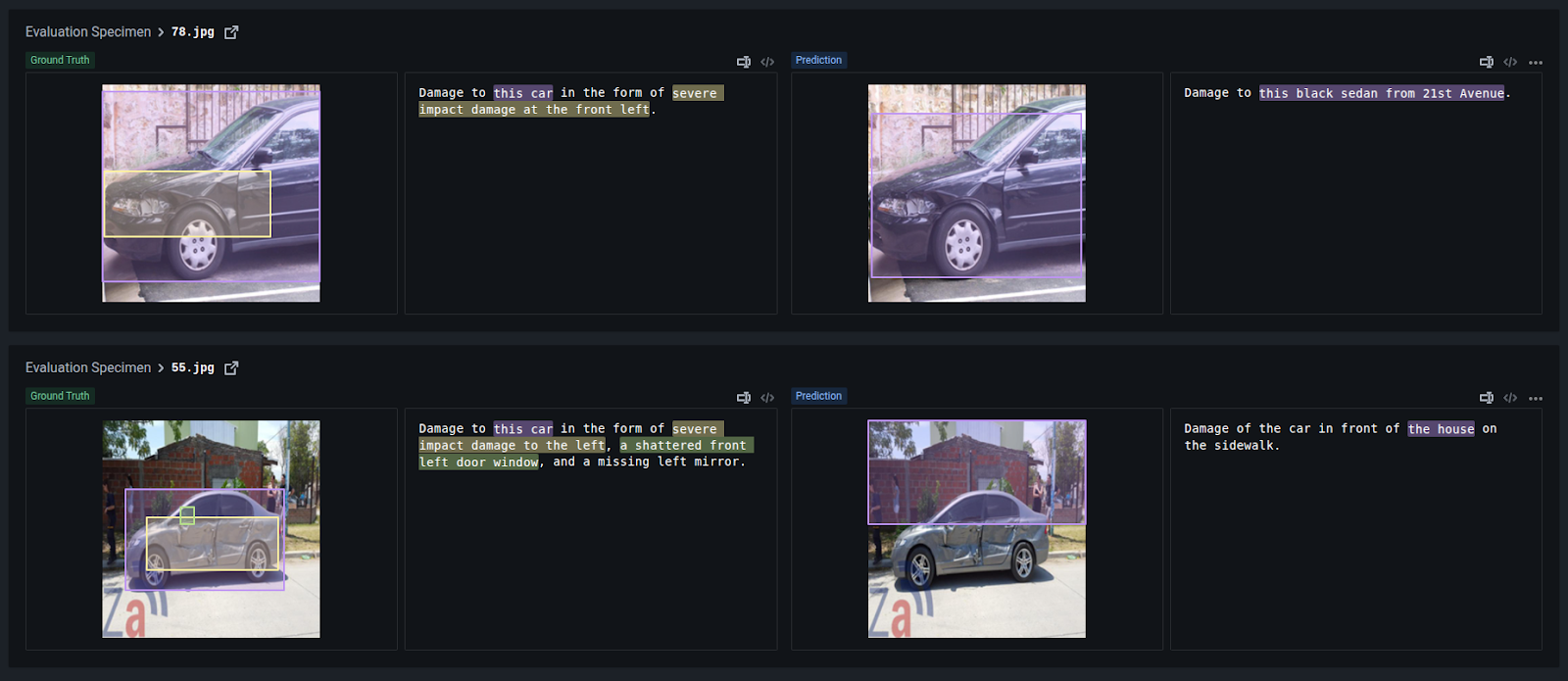

To verify whether CoT reasoning actually improves model performance for phrase grounding tasks, we ran two separate training experiments: one with CoT enabled and one without. Both models were trained on the same dataset with similar hyperparameters.

Without CoT, the model predictions are shallow and often miss the actual damage details. For instance, in the first evaluation specimen, the ground truth describes "severe impact damage at the front left," but the prediction just says "Damage to this black sedan from 21st Avenue", hallucinating irrelevant details (a street name) while completely ignoring the actual damage characteristics.

In the second evaluation specimen, the ground truth specifies "shattered front left door window" and "missing left mirror", but the prediction only gives a vague "Damage of the car in front of the house on the sidewalk", which describes the scene location rather than the damage itself.

With CoT, the model explicitly reasons through what it observes before producing the final answer. The <datature_think> blocks show the model articulating observations like "The front end is totally wrecked, and the right side of the vehicle is heavily compromised" and "seems concentrated around that specific region of the front bumper and above the lower right tire."

%20(1).png)

This reasoning step forces the model to:

- Actually attend to the relevant regions of the image

- Articulate specific visual features before summarizing

- Produce final predictions that are more closely aligned with the ground truth damage descriptions

The results demonstrate that CoT acts as a form of "self-grounding". By requiring the model to verbalize its reasoning, it anchors the output in actual visual evidence rather than generating plausible-sounding but inaccurate descriptions.

Deploying Your VLM With CoT

To test your trained VLM on unseen data, you can use our Vi SDK to host the model on your own device with a GPU, then make predictions by feeding in images. You will need to ensure that you set the cot flag to True so that the model knows that it needs to output in that format, otherwise the model will default to outputting the answer directly.

from vi.inference import ViModel

secret_key = "YOUR_DATATURE_VI_SECRET_KEY"

organization_id = "YOUR_DATATURE_VI_ORGANIZATION_ID"

run_id = "YOUR_DATATURE_VI_RUN_ID"

model = ViModel(

secret_key=secret_key,

organization_id=organization_id,

run_id=run_id

)

result = model(source="path/to/your/image", cot=True, stream=False)

print(result)If the model architecture you’ve trained is Cosmos Reason1, you can also use NVIDIA NIMs to deploy via a containerized approach.

Try It On Your Own Data

If you want to try out finetuning a VLM with CoT on your own custom dataset, head over to Datature Vi and create a free account. You can upload some images, annotate them with the think and answer tags, then train a model with just a few clicks.

If you want to learn more about customization options, such as chaining think and answer tags, adjusting model hyperparameters, or how you can automate the transformation of answers to the CoT format, our Developer’s Documentation.

Our Developer’s Roadmap

CoT support in Datature Vi currently centers on Cosmos Reason1, but this is just the starting point. We're working to bring CoT capabilities to other reasoning-capable VLMs, giving developers more flexibility to choose the model that best fits their use case, whether that's optimizing for speed, accuracy, or domain-specific performance.

One challenge we're tackling is that not all models reason the same way. Some structure their thinking with explicit tags or delimiters, others produce freeform reasoning, and some interleave observations with conclusions. These different CoT "flavors" mean we can't take a one-size-fits-all approach to parsing and presenting reasoning traces. Our goal is to abstract away these differences so that developers get a consistent interface regardless of which model they're running under the hood.

There's also a practical performance consideration. CoT prompting generates significantly more tokens than standard inference since the model is essentially thinking out loud before answering. For latency-sensitive applications, this overhead matters. We're exploring options like caching reasoning traces for repeated queries and streaming intermediate steps so users aren't stuck waiting for the full chain to complete before seeing any output. These optimizations will be especially important as CoT becomes a standard part of production VLM workflows.

References

- Wei, J., et al. (2022). "Chain-of-Thought Prompting Elicits Reasoning in Large Language Models." NeurIPS 2022. arXiv:2201.11903

- Zhang, Z., et al. (2023). "Multimodal Chain-of-Thought Reasoning in Language Models." TMLR. arXiv:2302.00923

- Zhang, R., et al. (2024). "Improve Vision Language Model Chain-of-thought Reasoning." arXiv:2410.16198

- Pantazopoulos, G., et al. (2025). "Towards Understanding Visual Grounding in Vision Language Models." arXiv:2509.10345

- NVIDIA (2025). "Cosmos-Reason1: From Physical Common Sense To Embodied Reasoning." arXiv:2503.15558

.jpeg)

.png)