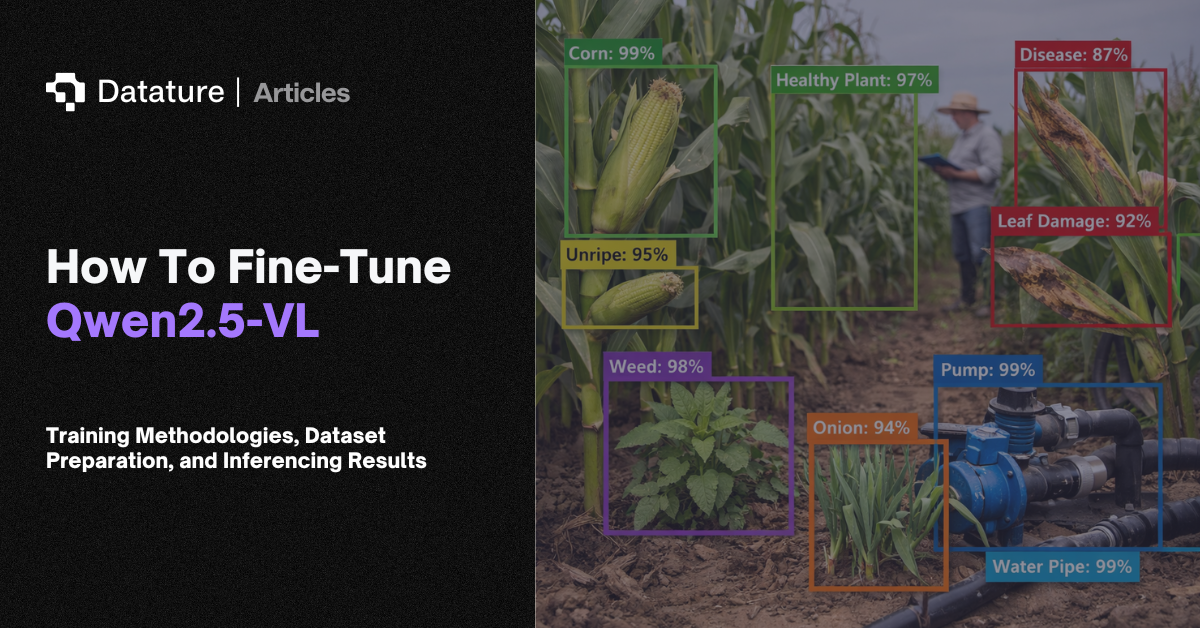

Released in January 2025, Qwen2.5-VL represents the latest advancement in the Qwen vision-language model series. Building upon the foundation established by Qwen2-VL, this new iteration introduces substantial improvements in visual perception, multimodal reasoning, and agentic functionality. With enhanced architecture, expanded training data, and support for a broader range of tasks—including document parsing, object grounding, and long-form video understanding—Qwen2.5-VL marks a significant step forward in the development of large vision-language models.

What is a Vision-Language Model

A Vision-Language Model (VLM) is a type of multimodal machine learning model designed to understand combinations of visual and linguistic inputs, then generate outputs conditioned on those inputs. Visual inputs include images and video; linguistic inputs include captions, questions, or instructions. By fusing visual and linguistic understanding, VLMs bridge the gap between computer vision and natural language processing.

What is New in Qwen2.5-VL

Qwen2.5-VL distinguishes itself with four major capabilities:

- Precise object grounding with support for absolute coordinates and JSON outputs, enhancing spatial reasoning.

- Powerful omni-document parsing, enabling recognition of complex, multilingual documents including handwriting, charts, and formulas.

- Robust long video understanding, with temporal resolution scaling that allows event-level grounding in videos spanning hours.

- Enhanced agent functionality that enables sophisticated visual decision-making and tool use on both desktop and mobile platforms.

For the purposes of this article, we will be diving more into precise object grounding through the lens of dense captioning using a modification of the “Visual Genome” dataset.

What is Dense Captioning

Dense captioning is the task of simultaneously detecting multiple regions in an image and generating a natural language description for each region. It differs from image captioning, which produces a single description at the image level, and from object detection, which operates at the region level but restricts each region's label to a pre-defined class.

Dense captioning is also different from phrase grounding. A phrase grounding input includes an image and an image-level caption, and the model seeks to relate regions in the image to noun phrases within that caption. Dense captioning receives no image-level caption: the goal is simply to detect regions within an image and assign noun phrases to them, which is arguably a more difficult task for a VLM.

The Data

We seek to train Qwen2.5-VL for dense captioning, so we train our model on a dense captioning dataset, namely the Visual Genome dataset.

Visual Genome Dataset

The Visual Genome dataset is a large, richly annotated benchmark designed to support fine-grained scene understanding in computer vision and vision-language tasks. It serves as a key resource for training and evaluating models on tasks like dense captioning, visual question answering, object detection, scene graph generation, and more.

Modified Visual Genome

Our modification of Visual Genome stems from limitations of our system. Visual Genome is verbose: a single image can have upwards of 300 ground-truth regions, each with a detailed caption. Some of these regions overlap heavily, both physically and semantically. Because our output token length is limited, we selectively eliminate these overlapping ground-truth annotations so that the targets match what our system can actually produce.

Cleaning the Visual Genome dataset involves two key steps: region-based grouping and semantic overlap suppression. Region-based grouping is performed by computing pairwise Box IoU scores for all bounding boxes and grouping boxes that exhibit significant overlap. Once regional groups are formed, semantic overlap suppression is applied, which consists of semantic-based grouping and representative selection. The region captions are encoded with SentenceTransformer to produce semantic embeddings, and these embeddings are then clustered. For each cluster, a representative phrase is selected by choosing the phrase closest to the median embedding. This phrase is retained as the canonical ground-truth annotation for that group.

Using the above data-cleaning method, we remove anywhere from 60% to 90% of the annotations in an image while maintaining semantic enrichment.
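
The two cleaning steps described above can be sketched roughly as follows. This is a minimal illustration rather than our exact pipeline: the function names, the IoU threshold of 0.5, and the simplification of treating each regional group as a single semantic cluster are all assumptions, and the embeddings are passed in as plain lists (in practice they would come from SentenceTransformer).

```python
from itertools import combinations
from math import dist
from statistics import median


def box_iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def group_by_iou(boxes, threshold=0.5):
    """Union-find grouping: boxes linked by IoU >= threshold share a group."""
    parent = list(range(len(boxes)))

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving
            i = parent[i]
        return i

    for i, j in combinations(range(len(boxes)), 2):
        if box_iou(boxes[i], boxes[j]) >= threshold:
            parent[find(i)] = find(j)

    groups = {}
    for i in range(len(boxes)):
        groups.setdefault(find(i), []).append(i)
    return list(groups.values())


def pick_representative(indices, embeddings):
    """Return the index whose embedding is closest to the per-dimension median."""
    center = [median(dim) for dim in zip(*(embeddings[i] for i in indices))]
    return min(indices, key=lambda i: dist(embeddings[i], center))


# Hypothetical toy data: two overlapping boxes and one isolated box.
boxes = [(0, 0, 10, 10), (1, 1, 10, 10), (50, 50, 60, 60)]
embeddings = [[0.0, 0.0], [1.0, 1.0], [1.0, 0.9]]
for group in group_by_iou(boxes):
    print(group, "->", pick_representative(group, embeddings))
```

Only the representative of each group would be kept as a ground-truth annotation; the other boxes and phrases in the group are discarded.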

Preparing Data for Qwen2.5-VL

To fine-tune Qwen2.5-VL for dense captioning, your dataset must be transformed into a chat-based format that mimics the conversational input Qwen2.5-VL is trained on. Each training example is structured as a sequence of message roles (system, user, and assistant) where the image and associated prompt are treated as user input, and the expected region-caption pairs are treated as assistant output.

Each sample follows this structure:

```python
# `point` is one dataset record holding an image path and its annotations.
message = [
    {
        "role": "system",
        "content": [
            {
                "type": "text",
                "text": "You are a helpful assistant. Your task is"
                        " to return to the user a set of phrase"
                        " and bounding box pairs"
                        " in the form of (phrase, [x1,"
                        " y1, x2, y2]). Format each unique"
                        " object that you see in the image."
                        " Additionally, for each object you see,"
                        " please provide a single phrase that"
                        " describes the object.",
            },
        ],
    },
    {
        "role": "user",
        "content": [
            {
                "type": "image",
                "image": f"{point['image_src']}",
            },
            {
                "type": "text",
                "text": "Please return the phrase and bounding box"
                        " pairs for each unique object you"
                        " see in the image. Please do not"
                        " include duplicates.",
            },
        ],
    },
    {
        "role": "assistant",
        "content": [
            {
                "type": "text",
                # Serialize the (phrase, box) pairs as the target text.
                "text": str(list(zip(*point['annotations'].values()))),
            }
        ],
    },
]
```

Here, point['image_src'] represents the local file path of an image, and list(zip(*point['annotations'].values())) represents a list of phrase-coordinate pairs built from the image’s bounding boxes and associated captions. The message is then passed to Qwen2.5-VL’s processor, which renders it with the chat template and tokenizes it for model training or inference.

Note that Qwen2.5-VL can accept multiple images or videos as input and supports batch processing, which involves sending multiple individual messages to the model for batch inference. This capability is not necessary for our task, so we simply use single-message, single-image processing. For more information, please see Qwen2.5-VL’s GitHub repository.
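
Because the assistant turn is simply the string form of a Python list of (phrase, box) pairs, a generated response can be turned back into structured data with the standard library. The sketch below is one possible way to do that; `parse_pairs` is a hypothetical helper name, and it assumes the model's output follows the same format as the training target.

```python
import ast


def parse_pairs(text):
    """Parse "[('phrase', [x1, y1, x2, y2]), ...]" into validated pairs."""
    try:
        raw = ast.literal_eval(text)
    except (ValueError, SyntaxError):
        return []  # malformed or truncated generation
    if not isinstance(raw, (list, tuple)):
        return []

    pairs = []
    for item in raw:
        well_formed = (
            isinstance(item, (list, tuple)) and len(item) == 2
            and isinstance(item[0], str)
            and isinstance(item[1], (list, tuple)) and len(item[1]) == 4
            and all(isinstance(v, (int, float)) for v in item[1])
        )
        if well_formed:
            pairs.append((item[0], [float(v) for v in item[1]]))
    return pairs


print(parse_pairs("[('a blue truck', [12, 40, 220, 180])]"))
print(parse_pairs("[('a blue truck', [12, 40, 220"))  # truncated output
```

Returning an empty list for unparseable text is a design choice for this sketch; a stricter pipeline might instead try to salvage the pairs that appear before the truncation point.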

Finetuning Qwen2.5-VL

While Qwen2.5-VL is available in multiple sizes, ranging from 3 billion to 72 billion parameters, our system is unable to fine-tune even the smallest model without training strategies that reduce computational requirements. For our experiment, we have access to an RTX 4070 with 12GB of VRAM, so we leverage LoRA (Low-Rank Adaptation) and model quantization to make fine-tuning possible.

LoRA and Quantization

Training large vision-language models like Qwen2.5-VL requires large computational resources. Training these models therefore faces constraints related to memory, compute time, and hardware access, particularly on consumer-grade GPUs. To overcome these limitations, we leverage two strategies: Low-Rank Adaptation (LoRA) and quantization. Together, these strategies allow for efficient fine-tuning of large models by reducing memory overhead and computational complexity with negligible impact on performance.

LoRA

Low-Rank Adaptation (LoRA) is a parameter-efficient fine-tuning technique that injects trainable low-rank matrices into each layer of a transformer architecture. Instead of updating all parameters of a large model, LoRA introduces a small number of trainable weights while freezing the original model weights. This dramatically reduces the number of trainable parameters and memory requirements, making fine-tuning feasible on lower-resource hardware.

In our fine-tuning of Qwen2.5-VL, LoRA trains the rank-decomposition weight matrices in the self-attention module that re-parameterise W_q and W_v. We use a rank of 4 and an alpha of 16, which set the rank of the decomposition matrices and the scale factor applied to the newly learned weights, respectively. Together, these allow the model to adapt to dense captioning without full retraining, avoiding the need for significant computational resources.
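
To give a feel for the savings, the arithmetic below compares a full projection matrix with its LoRA factors at rank 4 and alpha 16. It is only a back-of-the-envelope sketch: the projection width of 2048 is a hypothetical value chosen for illustration, not a figure taken from the Qwen2.5-VL configuration, and the alpha / rank scaling is the standard LoRA formulation.

```python
def lora_trainable_params(d_in, d_out, rank):
    """Weights in the two low-rank factors: A (rank x d_in) and B (d_out x rank)."""
    return rank * d_in + d_out * rank


def lora_scaling(alpha, rank):
    """Scale applied to the low-rank update B @ A before it is added to W."""
    return alpha / rank


d = 2048  # hypothetical projection width, for illustration only
full = d * d
low_rank = lora_trainable_params(d, d, rank=4)

print(f"full matrix:  {full:,} weights")
print(f"LoRA factors: {low_rank:,} weights ({100 * low_rank / full:.2f}% of full)")
print(f"scaling alpha / rank = {lora_scaling(16, 4)}")
```

Under these assumptions, each adapted projection trains well under 1% of the weights it would need with full fine-tuning.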

Quantization

Quantization reduces the precision of model weights and activations from 32-bit floating point to lower-bit formats, such as 4-bit or 8-bit, significantly decreasing memory usage and, in some settings, speeding up computation. We use the following BitsAndBytes (BnB) configuration:

```python
import torch
from transformers import BitsAndBytesConfig

bnb_config = BitsAndBytesConfig(
    load_in_4bit=True,                      # store weights in 4-bit precision
    bnb_4bit_quant_type="nf4",              # NormalFloat4 quantization
    bnb_4bit_use_double_quant=True,         # also quantize the quantization constants
    bnb_4bit_compute_dtype=torch.bfloat16,  # run matrix multiplications in bfloat16
)
```

This allows Qwen2.5-VL to be loaded and fine-tuned on commodity GPUs, like our RTX 4070, while preserving most of its original performance. Quantization is especially critical for vision-language models due to their large size and the high memory demands of processing both visual and textual inputs.

Fine-tuning Process

We fine-tuned Qwen2.5-VL using a LoRA-based adapter approach combined with 4-bit quantization to reduce the memory footprint and enable training on a single RTX 4070 with 12GB VRAM. The model was loaded using Hugging Face’s transformers and peft libraries, with quantization applied via BitsAndBytes.

Each training sample consisted of a conversation-like input containing an image, a prompt, and the expected dense captions paired with bounding boxes. This was formatted to follow Qwen2.5-VL’s multi-modal chat template and then tokenized using the model's processor. The assistant’s response contained the list of phrase and bounding box pairs formatted as (phrase, [x1, y1, x2, y2]).

We trained the model using its default cross-entropy loss over the output tokens, updating only the LoRA adapter weights. We applied a rank of 4 and an alpha of 16 in LoRAConfig, targeting the W_q and W_v projections within the attention mechanism. Due to limited compute, we used a batch size of 1 (with gradient accumulation steps to simulate larger batches), along with the AdamW optimizer and learning rate warm-up. We then trained the model for 10 epochs on a dataset of 1250 training samples, which took around 3 hours. We release our open-source code here for ease of use.

Evaluation

Results

Now that our model is fine-tuned on dense captioning, we showcase the results in the Visualized Results section below. The results show that our model learned the dense-captioning task, but with a few errors that we discuss next.

Elaboration on Errors

The errors seen below may be attributed to multiple factors, including our dataset and loss function.

With regard to our loss function, our model may effectively be rewarded for repetition. As discussed later, most VLMs and LLMs use token-level cross-entropy loss, meaning that the model is rewarded for predicting tokens in the order they appear in the ground-truth annotation, regardless of whether each phrase or bounding box is correct on its own. This means that if our ground-truth has [(phrase1, [x1, y1, x2, y2]), (phrase2, [x3, y3, x4, y4])], but we output [(phrase2, [x3, y3, x4, y4]), (phrase1, [x1, y1, x2, y2])], we will incur a high loss. However, if our model produces [(phrase1, [x1, y1, x2, y2]), (phrase1, [x1, y1, x2, y2])], then we will incur a lower loss because we correctly predicted the first phrase-bounding box pair, despite the repetition. Thus, token-level loss is not an ideal objective for VLM fine-tuning on this task, and better objectives are an active research topic.
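
The ordering effect described above can be illustrated with a toy comparison. This sketch counts position-wise mismatches as a crude stand-in for token-level cross-entropy. Real training uses token probabilities under teacher forcing, so the numbers here only illustrate the direction of the effect, and the placeholder names are hypothetical.

```python
def positionwise_mismatches(target, prediction):
    """Count positions where the predicted item differs from the target item."""
    length = max(len(target), len(prediction))
    padded_target = target + [None] * (length - len(target))
    padded_prediction = prediction + [None] * (length - len(prediction))
    return sum(t != p for t, p in zip(padded_target, padded_prediction))


target = ["phrase1", "box1", "phrase2", "box2"]
swapped = ["phrase2", "box2", "phrase1", "box1"]   # correct content, wrong order
repeated = ["phrase1", "box1", "phrase1", "box1"]  # first pair duplicated

print(positionwise_mismatches(target, swapped))   # 4
print(positionwise_mismatches(target, repeated))  # 2
```

The swapped output describes the image perfectly yet mismatches at every position, while the repeated output misses an object and still scores better.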

Visualized Results

Here is a sample of our results. We can see that most of the annotations are good dense-captioning outputs – given that we only tell the model to output any objects that it sees. Some non-ideal outputs here are: 71, 73, and 77 repeating the same output with similar bounding boxes; 74 identifying prosthetic legs as boots; 69 being identified as red shoes instead of a wrap on a prosthetic leg; and 70 using “watermark” to mean a “wave”.

64: "a pair of water skis",

65: "a blue truck parked by the grass",

66: "red and white striped cord",

67: "a blue truck parked by the grass",

68: "a man wearing a yellow shirt",

69: "a man with red shoes",

70: "a watermark on the picture",

71: "man wearing a yellow shirt",

72: "the water is blue",

73: "a man wearing a yellow shirt",

74: "a pair of boots",

75: "a black boat in the water",

76: "man wearing black pants",

77: "a mean wearing a yellow shirt"

Here is another sample of our results. We can see that most of the annotations are good dense-captioning outputs, similar to the previous results. Like before, we have some non-ideal results, namely repetitive annotations of 56 and 59, as well as 58 and 62. Moreover, the coffee cup in 60 is identified as a bottle rather than a coffee cup. However, again, given that we only tell the model to report back any objects it finds without any other prompting, it seems the model is doing fairly well.

55: "the cooler is blue and white",

56: "the laptop is pink",

57: "the car is parked",

58: "the car is blue",

59: "the laptop is pink",

60: "the bottle is red",

61: "the radio is black",

62: "the car is blue",

63: "the steering wheel",

Here is a final sample of our results. We can see that most of the annotations are good dense-captioning outputs, similar to the two previous results. Like before, we have repetitive annotations of 15, 18, and 21, as well as 13, 16, and 20.

13: "horse splashing through water",

14: "a person riding a horse",

15: "a rider is wearing a blue and white shirt",

16: "the horse is splashing water",

17: "a shadow of a horse on the ground",

18: "the rider is wearing a blue and white jacket",

19: "the horse is splashing water",

20: "a large black horse",

21: "the rider is wearing a blue and white jacket",

22: "water puddle the horse is walking through",

23: "water splashing up around the horse",

Shortcomings of Language Model Fine-tuning for Dense Captioning

Limited Output Token Length

One of the primary constraints when fine-tuning VLMs and LLMs is the limited output token length, which refers to the maximum number of tokens the model can generate in a single response. This is particularly an issue for dense captioning, which inherently requires the model to output a large number of region-level descriptions, each accompanied by its bounding box. As the number of regions increases, so does the size of the expected output, especially when phrases are relatively descriptive. With a short output limit, we would therefore fail to identify many objects in the image. Increasing the output token length would help, but it raises compute cost and may incentivize repetition in the generated output.

Inaccurate Loss Functions

VLMs trained for dense captioning currently suffer from inaccurate loss functions, where outputs that are semantically and spatially correct can still be penalized due to small variations in ordering or phrasing. These models also typically rely on token-level cross-entropy loss, which fails to evaluate bounding boxes directly, resulting in a disconnect between token-level accuracy and task-specific quality. As a result, there is a need to explore or design loss functions that directly incorporate object-level metrics, such as IoU-aware or phrase similarity-aware objectives.
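
As a point of contrast with token-level loss, the sketch below scores predictions with an order-invariant, box-level measure: predictions are greedily matched to ground-truth boxes by IoU, and precision and recall are computed from the matches. This is an evaluation-side illustration, not a differentiable training loss. The function names and the 0.5 threshold are assumptions, and phrases are ignored for brevity.

```python
def box_iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def greedy_match(predicted, ground_truth, iou_threshold=0.5):
    """Pair each ground-truth box with at most one prediction, best IoU first."""
    candidates = sorted(
        ((box_iou(p, g), pi, gi)
         for pi, p in enumerate(predicted)
         for gi, g in enumerate(ground_truth)),
        reverse=True,
    )
    used_pred, used_gt, matches = set(), set(), []
    for iou, pi, gi in candidates:
        if iou < iou_threshold:
            break  # remaining candidates overlap too little to count
        if pi not in used_pred and gi not in used_gt:
            used_pred.add(pi)
            used_gt.add(gi)
            matches.append((pi, gi, iou))
    return matches


def precision_recall(predicted, ground_truth, iou_threshold=0.5):
    """Fraction of predictions matched, and fraction of ground truth recovered."""
    matches = greedy_match(predicted, ground_truth, iou_threshold)
    precision = len(matches) / len(predicted) if predicted else 0.0
    recall = len(matches) / len(ground_truth) if ground_truth else 0.0
    return precision, recall


gt = [(0, 0, 10, 10), (20, 20, 30, 30)]
print(precision_recall([(20, 20, 30, 30), (0, 0, 10, 10)], gt))  # reordered
print(precision_recall([(0, 0, 10, 10), (0, 0, 10, 10)], gt))    # repeated
```

Unlike the token-level view, this measure gives the reordered output full credit and penalizes the repeated one.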

Lack of Innate Confidence Scoring

One notable limitation of VLMs, and LLMs alike, is their inability to natively assign confidence scores to their predictions, such as our bounding box–phrase pairs in dense captioning. Unlike traditional object detectors (e.g., Faster R-CNN, YOLO, or DETR), which are explicitly designed to produce class scores or probability distributions over object categories, Qwen2.5-VL is a generative, decoder-style vision-language model. As such, it generates outputs token-by-token via language modeling objectives without an internal mechanism for estimating certainty or probability over structured outputs. While there are some methods to obtain confidence-like scores, these methods aren’t well defined and don’t necessarily correlate with correctness. Thus, other post-training evaluation methods, like those mentioned above, are needed to evaluate the performance of a VLM.
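
One example of such a confidence-like score is the geometric mean of the token probabilities that make up a generated pair. The sketch below assumes per-token log-probabilities are available from the generation step; the values used here are hypothetical, and, as noted above, a high score of this kind does not guarantee that the pair is correct.

```python
import math


def sequence_confidence(token_logprobs):
    """Geometric-mean token probability: exp(mean(log p_i))."""
    if not token_logprobs:
        return 0.0
    return math.exp(sum(token_logprobs) / len(token_logprobs))


# Hypothetical per-token log-probabilities for two generated pairs.
confident_pair = [math.log(0.9), math.log(0.8), math.log(0.95)]
uncertain_pair = [math.log(0.9), math.log(0.2), math.log(0.5)]

print(round(sequence_confidence(confident_pair), 3))
print(round(sequence_confidence(uncertain_pair), 3))
```

Averaging in log space keeps the score comparable across pairs of different token lengths, which a raw product of probabilities would not.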

Hallucinations and Repetition

Even after fine-tuning, Qwen2.5-VL exhibits difficulty identifying certain complex or less frequent objects, particularly in cluttered scenes or when object boundaries are ambiguous. Additionally, the model often repeats bounding boxes or predicted phrases, leading to redundant or low-diversity outputs. This repetition is common in VLMs and LLMs, especially when output formatting is rigid or when diversity is not directly optimized during training.

Bounding Box Representations

During our experiments, we tried several representations of bounding box coordinates to determine whether one yielded better results than another.

Absolute Bounding Box Coordinates

These are defined as (x1, y1, x2, y2) using absolute image size, where the coordinates are measured in pixels relative to the image dimensions. This is the most direct way to represent bounding boxes but can vary across images of different sizes.

In our experiments, absolute bounding box coordinates suffered from a couple of issues. First, the fine-tuned model sometimes predicted bounding box coordinates outside the image itself, especially when a detected object had a boundary near an image border. This, however, can mostly be solved by clipping the output coordinates to the image bounds. Second, when images are highly repetitive (see image below), the model focuses on a specific point in the image rather than spreading out its detections. There isn’t much we can do about this other than prompting, and even explicitly instructing the model didn’t yield better results.
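
The clipping step mentioned above is a small post-processing function. A minimal sketch, assuming boxes are (x1, y1, x2, y2) in pixels and that `clip_box` is a hypothetical helper name:

```python
def clip_box(box, width, height):
    """Clamp (x1, y1, x2, y2) to the image rectangle [0, width] x [0, height]."""
    x1, y1, x2, y2 = box
    # Clamp each coordinate, then sort so that x1 <= x2 and y1 <= y2.
    x1, x2 = sorted((min(max(x1, 0), width), min(max(x2, 0), width)))
    y1, y2 = sorted((min(max(y1, 0), height), min(max(y2, 0), height)))
    return [x1, y1, x2, y2]


# A prediction that spills past the left, right, and bottom edges of a 640x480 image.
print(clip_box([-5, 10, 650, 500], width=640, height=480))  # [0, 10, 640, 480]
```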

Normalized Bounding Box Coordinates

In this format, the bounding box is represented as (x1_n, y1_n, x2_n, y2_n) using normalized (between 0 and 1) image coordinates. Each coordinate is scaled by the image width or height, enabling more consistent representation across images of varying resolutions.
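
Converting between absolute and normalized coordinates is a per-axis division or multiplication. The helper names below are hypothetical, and the sketch assumes boxes are given as (x1, y1, x2, y2):

```python
def normalize_box(box, width, height):
    """Scale pixel coordinates to the [0, 1] range."""
    x1, y1, x2, y2 = box
    return [x1 / width, y1 / height, x2 / width, y2 / height]


def denormalize_box(box, width, height):
    """Scale [0, 1] coordinates back to pixels."""
    x1, y1, x2, y2 = box
    return [x1 * width, y1 * height, x2 * width, y2 * height]


normalized = normalize_box([160, 120, 480, 360], width=640, height=480)
print(normalized)                             # [0.25, 0.25, 0.75, 0.75]
print(denormalize_box(normalized, 640, 480))  # [160.0, 120.0, 480.0, 360.0]
```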

In our experiments, normalised bounding boxes suffered significantly from repetitive suggestions. Even in images without significant repetition, the model repeated the same phrases with slightly different coordinates. We hypothesize that this could be due to the lack of representation of normalised coordinates during the original pretraining phase. Since the model was likely not exposed to normalised spatial patterns, it tends to overfit to repetitive structures and fails to generalise across diverse object arrangements, particularly in scenes with dense layouts. However, without access to Qwen2.5-VL pretraining data, we cannot confirm this.

Discretized Bounding Box Coordinate Space

Discretized coordinates are written as (locXXXX, locXXXX, locXXXX, locXXXX), where each locXXXX is a token and XXXX is its identifier; there are usually 1024 such identifiers. This representation is common in language-model-style tokenization approaches, allowing coordinate values to be embedded directly into a model’s token sequence. A model that leverages this technique is PaliGemma, which can be read about in more detail in our Introduction to PaliGemma 2 and our Primer on Fine-Tuning PaliGemma blog articles.
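
A rough sketch of how a pixel coordinate might be mapped onto such location tokens is shown below. The binning formula, the bin-centre decoding, and the exact token spelling are assumptions made for illustration; the conventions used by PaliGemma or any other model may differ.

```python
NUM_BINS = 1024  # number of location identifiers mentioned in the text


def to_loc_token(value, size, num_bins=NUM_BINS):
    """Map a pixel coordinate in [0, size] to a token name such as 'loc0512'."""
    index = int(value / size * num_bins)
    index = max(0, min(index, num_bins - 1))  # keep the index inside the vocabulary
    return f"loc{index:04d}"


def from_loc_token(token, size, num_bins=NUM_BINS):
    """Map a token name back to the pixel coordinate at the centre of its bin."""
    index = int(token[3:])
    return (index + 0.5) / num_bins * size


print(to_loc_token(320, 640))          # loc0512
print(to_loc_token(640, 640))          # loc1023
print(from_loc_token("loc0512", 640))  # 320.3125
```

The round trip is lossy by design: every coordinate within a bin decodes to the same value, so the achievable precision is bounded by the image size divided by the number of bins.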

In our experiments, introducing new tokens for discretised bounding box coordinates led to an inability to detect anything. This is likely due to how the new tokens are added and to our subsequent training process. When tokens are added to a model, it is standard to initialise the new token embeddings to the mean of all existing token embeddings and then fine-tune the model to warm up these tokens. However, in our experiments, the subsequent fine-tuning seemed to make the model worse, to the point where it started outputting the user’s original prompt. This may be as simple as needing to fine-tune for longer, but with limited compute, fully fine-tuning to warm up these tokens was not feasible.

Is There An Easy Way To Fine-Tune Qwen2.5 VL?

Fine-tuning your own VLMs can be daunting, and most of the tools out there are still in development or fragmented. That is why at Datature we build Vi, an end-to-end platform that allows users to fine-tune a variety of VLMs on their own datasets easily. Check out our introduction and tutorial below ↘

What’s Next?

If you have questions, feel free to join our Community Slack to post your questions or contact us to finetune your own Qwen2.5-VL model on Datature Vi.

For more detailed information about the model functionality, customization options, or answers to any common questions you might have, read more on our Developer Portal.

Developer’s Roadmap

Datature recognizes the importance of multimodal models in practical use cases. With this in mind, we will be incorporating the Qwen2.5-VL architecture for fine-tuning, such that Datature Nexus users will be able to import and annotate multimodal datasets that can be used to train a Qwen2.5-VL model for their specific use cases. To learn more about the underlying Qwen2.5-VL architecture and performance, we refer you to the Qwen team’s Qwen2.5-VL Technical Report. We also note that while achieving academic benchmarks is significant, practical deployment still requires other considerations such as guard railing to ensure consistency and quality.
