Introduction to D-FINE

D-FINE represents a leap forward in object detection, refining traditional bounding box regression with advanced techniques. Unlike prior models, which often rely on coarse or rigid localization methods, D-FINE uses a novel Fine-grained Distribution Refinement (FDR) approach to iteratively adjust bounding boxes with precision. This, combined with Global Optimal Localization Self-Distillation (GO-LSD), enables the model to achieve remarkable accuracy, speed, and computational efficiency. As a result, D-FINE outperforms many existing models in real-time applications, making it particularly suitable for resource-constrained scenarios like mobile or edge devices.


DETR vs. CNN-Based Approaches

Detection Transformer (DETR)-based approaches like D-FINE offer a fundamental rethinking of object detection. Traditional CNN-based methods like YOLO or RetinaNet often rely on hand-designed components such as anchor boxes, fixed grid structures, or non-maximum suppression (NMS). DETR instead uses a transformer-based architecture that outputs object predictions directly as a set. Because transformers can model relationships across the entire image, the work previously done by these separate components is absorbed into the model itself.
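To make the contrast concrete, here is a minimal sketch of the greedy NMS post-processing step that CNN-based detectors typically apply and that DETR-style set prediction is designed to avoid. The `(x1, y1, x2, y2)` box format, the function names, and the 0.5 threshold are illustrative assumptions, not taken from any particular library.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), an assumed format


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def greedy_nms(boxes: List[Box], scores: List[float],
               iou_threshold: float = 0.5) -> List[int]:
    """Return the indices of the boxes that survive, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        # Keep a box only if it does not overlap too much with any kept box.
        if all(iou(boxes[i], boxes[j]) <= iou_threshold for j in keep):
            keep.append(i)
    return keep


# Two heavily overlapping boxes and one separate box (made-up values).
boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (50, 50, 60, 60)]
scores = [0.9, 0.8, 0.7]
kept = greedy_nms(boxes, scores)
```

In this toy example the second box overlaps the first beyond the threshold and is suppressed. A set-prediction model aims to emit one prediction per object in the first place, so no such filtering pass is needed.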

What Makes D-FINE Better?

D-FINE addresses several limitations of traditional object detection methods:

- Precision with Fine-Grained Adjustments

The Fine-grained Distribution Refinement (FDR) mechanism iteratively refines the bounding box distributions. By focusing on independent edge adjustments and leveraging a non-uniform weighting function, this approach captures uncertainties with greater accuracy. This ensures finer adjustments for small objects and resolves localization challenges.
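The refinement idea can be sketched roughly as follows, for a single box edge. This is a simplified illustration under stated assumptions, not the D-FINE implementation: the bin count, the `WEIGHTS` table, and the `refine_edge` helper are all hypothetical. Each decoder layer adds a residual to the previous layer's logits, and the edge offset is the expected value of a non-uniform weight table under the resulting distribution.

```python
import math
from typing import List, Tuple


def softmax(logits: List[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical non-uniform weights: fine steps near zero, coarse at the extremes.
WEIGHTS = [-0.5, -0.1, -0.02, 0.0, 0.02, 0.1, 0.5]


def refine_edge(initial_distance: float, scale: float,
                prev_logits: List[float],
                delta_logits: List[float]) -> Tuple[float, List[float]]:
    """One refinement step for one edge.

    Returns the refined edge distance and the updated logits, which the
    next layer would refine again.
    """
    logits = [p + d for p, d in zip(prev_logits, delta_logits)]
    probs = softmax(logits)
    offset = sum(w * p for w, p in zip(WEIGHTS, probs))
    return initial_distance + scale * offset, logits


# Layer 1: a flat distribution leaves the edge where it started.
d0, logits0 = refine_edge(40.0, 100.0, [0.0] * 7, [0.0] * 7)
# Layer 2: the residual favours a small positive bin, nudging the edge outward.
d1, logits1 = refine_edge(40.0, 100.0, logits0, [0, 0, 0, 0, 5.0, 0, 0])
```

Because the weights are densest around zero, a confident distribution can express very small corrections, while the outer bins still allow large jumps when the initial box is far off.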


- Anchor-Free Design

Unlike anchor-based frameworks that limit flexibility, D-FINE integrates seamlessly with anchor-free architectures like DETR. This reduces dependency on predefined anchor boxes, making the model more adaptable.

- Knowledge Distillation for Efficiency

Global Optimal Localization Self-Distillation (GO-LSD) is a knowledge distillation technique that leverages the refined predictions of a model's final layer to improve the learning of earlier layers. This is done by matching and aggregating the most accurate candidate predictions from every stage of the model into sets, which ensures that they all benefit from the distillation process. Both matched and unmatched predictions are then refined in a global optimization step. To deal with low-confidence predictions, a new Decoupled Distillation Focal (DDF) Loss is introduced, which weights the matched and unmatched predictions and balances their overall contribution, improving model stability and performance.
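The core self-distillation signal can be illustrated with a temperature-softened KL divergence between the final layer's edge distribution (the teacher) and an earlier layer's distribution (the student). This sketch is a generic knowledge distillation term, assuming a temperature of 5.0 and hypothetical function names. It does not reproduce the matching step or the DDF weighting of matched and unmatched predictions.

```python
import math
from typing import List


def softmax_t(logits: List[float], temperature: float) -> List[float]:
    """Softmax over logits divided by a temperature (higher = softer)."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(teacher: List[float], student: List[float],
                  eps: float = 1e-12) -> float:
    """KL(teacher || student) for two discrete distributions."""
    return sum(t * math.log((t + eps) / (s + eps))
               for t, s in zip(teacher, student))


def layer_distillation_loss(final_logits: List[float],
                            earlier_logits: List[float],
                            temperature: float = 5.0) -> float:
    """Distillation term pulling an earlier layer towards the final layer.

    The final layer's distribution is treated as a fixed target. The T^2
    factor is the usual rescaling used with temperature-softened targets.
    """
    teacher = softmax_t(final_logits, temperature)
    student = softmax_t(earlier_logits, temperature)
    return temperature ** 2 * kl_divergence(teacher, student)


# Made-up logits over 5 bins for one box edge.
final_layer = [0.1, 0.3, 4.0, 0.3, 0.1]
early_layer = [1.0, 1.0, 1.5, 1.0, 1.0]
loss_same = layer_distillation_loss(final_layer, final_layer)
loss_diff = layer_distillation_loss(final_layer, early_layer)
```

The loss is zero when an earlier layer already agrees with the final layer and grows as the two distributions diverge.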


- Lightweight and Fast

D-FINE optimises computationally intensive modules to deliver high-speed performance without sacrificing accuracy. This balance makes it ideal for real-time applications.

Open-Source Licensing

While other popular real-time object detectors like YOLOv8 and YOLO11 offer impressive performance, their adoption is often restricted by their licences. Both models fall under the AGPL-3.0 (GNU Affero General Public License v3.0), which requires derivative works to be released under the same licence and extends that source-sharing obligation to software offered to users over a network, a significant constraint for SaaS (Software-as-a-Service) applications.

In contrast, D-FINE is distributed under the more permissive Apache License 2.0. This licence allows for free use, modification, and distribution of the software, even for commercial purposes, without requiring users to open-source their derivative works. This makes D-FINE an attractive choice for organisations looking to integrate cutting-edge object detection capabilities while retaining control over proprietary innovations.

Useful Real-Time Applications

Real-time object detection with D-FINE offers transformative capabilities for a variety of high-impact computer vision applications. Its blend of accuracy, speed, and efficiency makes it suitable for numerous domains:

- Autonomous Vehicles

Vehicles need to identify pedestrians, obstacles, traffic signals, and other vehicles with precision in dynamic environments. D-FINE’s fine-grained localization ensures robust detection of small or overlapping objects while maintaining real-time performance crucial for navigation and decision-making.

- Drone Surveillance & Monitoring

Drones are revolutionising industries such as agriculture, security, and logistics, but they demand efficient, lightweight object detection systems for real-time operation. D-FINE addresses these challenges effectively in tasks like crop health monitoring, where it can identify anomalies (e.g., pest infestations or drought stress) in real-time with pinpoint accuracy, enabling targeted interventions that reduce waste and improve yield.

- Industrial Automation

Industrial environments require high-speed and reliable object detection to optimise processes and reduce errors. On assembly lines, D-FINE’s fine-grained bounding box adjustments enable it to spot subtle defects in products, such as scratches, deformations, or alignment issues, ensuring consistent quality control without slowing down operations. Furthermore, by analysing equipment through real-time video feeds, D-FINE can detect wear-and-tear indicators like leaks or misalignments, allowing for timely interventions and reduced downtime.

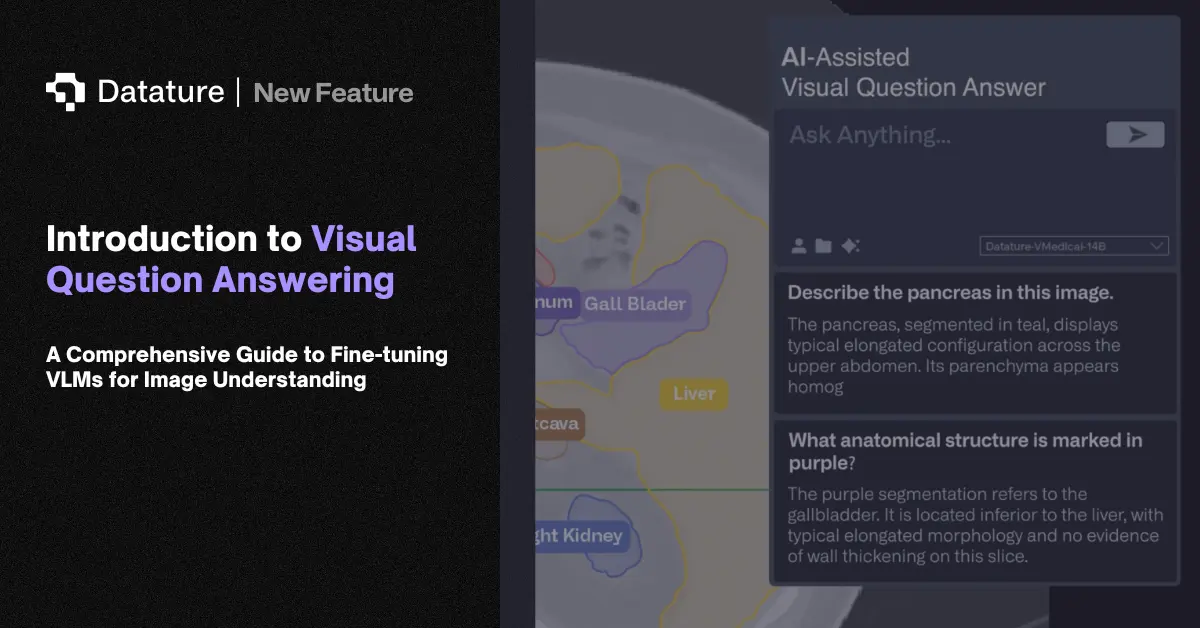

Fine-Tuning D-FINE On Your Custom Data

You can now fine-tune D-FINE models on your own custom datasets on Datature Nexus. In this example we’ll show you how to fine-tune a model to detect defects in aerial infrared scans of solar panels.


In defect inspection, the subtle nature of defects makes accurate detection challenging. The diversity of defect classes also calls for adaptable models that generalise well to unseen data. It is therefore crucial to train a robust model so that defects are caught before they reduce the energy output of solar panels and, in turn, operational profits.


Building Your Fine-Tuning Workflow

We’ll build a training workflow for D-FINE Nano with an input resolution of 320x320 pixels, starting from weights pre-trained on the COCO dataset. We’ll fine-tune this on our custom solar panel images for 5000 steps with a batch size of 8. Given our training set of 150 images, this works out to roughly 267 epochs (5000 × 8 / 150). The default optimizer is AdamW with a learning rate of 0.00034. Although this value is slightly lower than the default used in the paper, it helps to stabilise training losses and reduces the risk of exploding gradients.


Monitoring Model Evaluation Metrics

For comparison, we’ve set up an identical training run with YOLO11 Nano, using the same 320x320 input resolution and the same hyperparameters. The seed-lock feature in Nexus simplifies the setup of test controls, ensuring that all models are exposed to the same training images at precisely the same training step, provided the batch size remains consistent. This allows for a fair and accurate comparison of model performance.
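The principle behind a fixed seed can be shown with a small sketch. This is not how Nexus implements seed-lock, which is not described here. The `batches_for_run` helper and its shuffling scheme are hypothetical and only show why the same seed and batch size yield the same images at each step.

```python
import random
from typing import List


def batches_for_run(num_images: int, batch_size: int,
                    steps: int, seed: int) -> List[List[int]]:
    """Return the image indices seen at each training step for a given seed."""
    rng = random.Random(seed)  # private generator, so the order is reproducible
    batches: List[List[int]] = []
    pool: List[int] = []
    while len(batches) < steps:
        if len(pool) < batch_size:
            # Start a new epoch: reshuffle all indices (leftovers are dropped).
            pool = list(range(num_images))
            rng.shuffle(pool)
        batches.append(pool[:batch_size])
        pool = pool[batch_size:]
    return batches


# Two models trained with the same seed and batch size see identical batches.
run_a = batches_for_run(num_images=150, batch_size=8, steps=20, seed=42)
run_b = batches_for_run(num_images=150, batch_size=8, steps=20, seed=42)
# Changing the batch size changes which images land in each step.
run_c = batches_for_run(num_images=150, batch_size=16, steps=20, seed=42)
```

This also illustrates the caveat in the text: with a different batch size the same shuffled order is sliced differently, so the step-by-step correspondence between runs is lost.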


When analysing the mean average precision (mAP) of the model on positive defect classes, YOLO11 displayed strong performance on the class Shadowing with a score of 0.802. As a highly evolved architecture refined over the decade since its inception, it continues to serve as a solid accuracy benchmark for real-time object detectors.


D-FINE, for its part, achieved an even higher score of 0.898 on the class Shadowing, showcasing its robust capabilities in detecting small and difficult-to-identify defects in images. Using the Evaluation Preview feature in our Advanced Evaluation suite to visualize model predictions on the evaluation dataset also shows that the majority of predictions are quite accurate.
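For readers unfamiliar with the metric, per-class scores like the Shadowing figures above are typically average precision (AP) values, and mAP is their mean across classes. The sketch below shows one common way to compute AP for a single class, using all-point interpolation of the precision-recall curve. The exact definition used by the platform (IoU threshold, interpolation scheme) is not stated here, so treat this as an assumption, and the detection values as made up.

```python
from typing import List, Tuple


def average_precision(detections: List[Tuple[float, bool]],
                      num_ground_truth: int) -> float:
    """Area under the precision-recall curve for one class.

    detections: (confidence, is_true_positive) pairs. A detection counts as
    a true positive if it matched an unclaimed ground-truth box at the
    chosen IoU threshold (the matching itself is not shown here).
    """
    if num_ground_truth == 0:
        return 0.0
    ranked = sorted(detections, key=lambda d: d[0], reverse=True)
    precisions: List[float] = []
    recalls: List[float] = []
    tp = fp = 0
    for _, is_tp in ranked:
        if is_tp:
            tp += 1
        else:
            fp += 1
        precisions.append(tp / (tp + fp))
        recalls.append(tp / num_ground_truth)
    # Replace each precision with the best precision at any higher recall.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


# Three detections for a class with two ground-truth boxes.
ap_example = average_precision([(0.9, True), (0.8, False), (0.7, True)], 2)
ap_perfect = average_precision([(0.9, True), (0.8, True)], 2)
```

A false positive ranked above a true positive lowers the precision at higher recall levels, which is why the first example scores below the perfect case.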


Understandably, neither model performed as well on the other defect classes. Upon analysing the images with the lowest-confidence predictions in the evaluation dataset, we attribute this to the low representation of these defect classes in the dataset. This occurs in the real world when certain defects appear rarely and data is tedious to collect. Nevertheless, it can be mitigated through techniques such as oversampling of under-represented classes, augmentations, and even leveraging Generative AI for synthetic data.
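The resampling idea mentioned above can be sketched as a simple random oversampler. The `oversample` helper is hypothetical, the "RareDefect" label is a placeholder, and the sketch assumes one label per image. Real detection datasets often have several classes per image, which needs more care.

```python
import random
from collections import Counter
from typing import Dict, List, Tuple

Sample = Tuple[str, str]  # (image_id, class_label), an assumed layout


def oversample(samples: List[Sample], seed: int = 0) -> List[Sample]:
    """Duplicate minority-class samples at random until classes are balanced."""
    if not samples:
        return []
    rng = random.Random(seed)
    by_class: Dict[str, List[Sample]] = {}
    for sample in samples:
        by_class.setdefault(sample[1], []).append(sample)
    target = max(len(group) for group in by_class.values())
    balanced: List[Sample] = []
    for group in by_class.values():
        balanced.extend(group)
        # Draw extra copies with replacement to reach the largest class size.
        balanced.extend(rng.choices(group, k=target - len(group)))
    rng.shuffle(balanced)
    return balanced


# Six images of a common class and two of a rare one (placeholder data).
dataset = [(f"img_{i}", "Shadowing") for i in range(6)]
dataset += [(f"img_{i}", "RareDefect") for i in range(6, 8)]
balanced = oversample(dataset, seed=0)
class_counts = Counter(label for _, label in balanced)
```

Duplicated images add no new information on their own, so oversampling is usually paired with augmentations so that the repeated samples are not pixel-identical.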


Performance Comparison with YOLO11

Next, we exported both models to the ONNX format and ran inference on 100 test images. D-FINE Nano had a size of ~14MB, while YOLO11 Nano had a size of ~5MB. This is attributed to D-FINE’s transformer architecture, which has a higher number of parameters because its self-attention mechanisms require query, key, and value projection matrices. Additionally, transformers often include extensive feed-forward layers and embedding layers. Despite this difference, D-FINE’s average inference time per image (38.1ms) is competitive with that of YOLO11 (37.3ms), achieving the goal of real-time inference. To push the boundaries even further, D-FINE also allows for model optimization techniques such as quantization and pruning to compress the model size and increase inference throughput.
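Per-image latency figures like those above can be measured with a small timing harness. The sketch below is not the benchmark script behind the reported numbers. `mean_latency_ms` is a hypothetical helper that times any callable, and the dummy workload stands in for a real model. With ONNX Runtime, the callable would typically wrap a call to `InferenceSession.run` on a preprocessed image.

```python
import time
from typing import Any, Callable, Iterable


def mean_latency_ms(run_inference: Callable[[Any], Any],
                    inputs: Iterable[Any], warmup: int = 5) -> float:
    """Average wall-clock time per input, in milliseconds."""
    items = list(inputs)
    if not items:
        raise ValueError("need at least one input to time")
    for item in items[:warmup]:
        run_inference(item)  # warm-up runs are excluded from the timing
    start = time.perf_counter()
    for item in items:
        run_inference(item)
    elapsed = time.perf_counter() - start
    return 1000.0 * elapsed / len(items)


# Dummy workload standing in for a model call on 100 test images.
fake_images = [list(range(1000)) for _ in range(100)]
latency = mean_latency_ms(lambda image: sum(image), fake_images)
```

Warm-up runs matter in practice because the first few inferences often include one-off costs such as memory allocation, which would otherwise inflate the average.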


While D-FINE’s performance may seem comparable with that of YOLO11 based on our non-exhaustive testing, the key differentiator lies in the open-source licensing that enables anyone to leverage the power of a real-time object detector in any use case possible. The impact of such open-source models drives both research and industry forward, paving the way for better state-of-the-art models and techniques, as well as empowering organisations to leverage computer vision to drive up operational efficiency and bring down business costs.

What’s Next?

Datature is constantly looking to incorporate state-of-the-art models that can boost accuracy and inference throughput. We are actively looking for new DETR-based models that push the frontiers of object detection, and exploring how these models can be adapted for other tasks such as segmentation and pose estimation.

Our Developer’s Roadmap

If you have questions, feel free to join our Community Slack to post your questions or contact us if you wish to learn more about training a D-FINE model on Datature Nexus.

For more detailed information about the D-FINE architecture, customization options, or answers to any common questions you might have, read more on our Developer Portal.
